Christian Jakob, Monash University

As Earth continues to warm, Australia faces some important decisions.

For example, where should we place solar and wind energy infrastructure to reliably supply Australians with electricity? How can we secure our food production and freshwater supply? Should we invest in bigger dams to increase our resilience to drought, or better flood mitigation to manage more intense rainfall?

Deciding on the best path forward depends on having reliable and detailed information about how wind, water and sunlight will behave in our future. This information is provided by climate models: large computer simulations of Earth that are based on the fundamental laws of physics and represent everything from the Sun’s radiation, the carbon cycle and clouds to the ocean circulation as mathematical equations.

Running these models requires the most powerful computers available – also known as supercomputers – as well as large amounts of space to store the model results for use by governments, businesses and scientists alike.

But right now, Australia’s supercomputers are falling behind the rest of the world – and this constitutes a serious risk to our ability to mitigate and adapt to climate change.

What is a supercomputer?

What makes a computer a supercomputer is its computing size and, as a result, its ability to perform a huge number of calculations in a very short time.

Australia has two main national supercomputers for research: Gadi and Setonix.

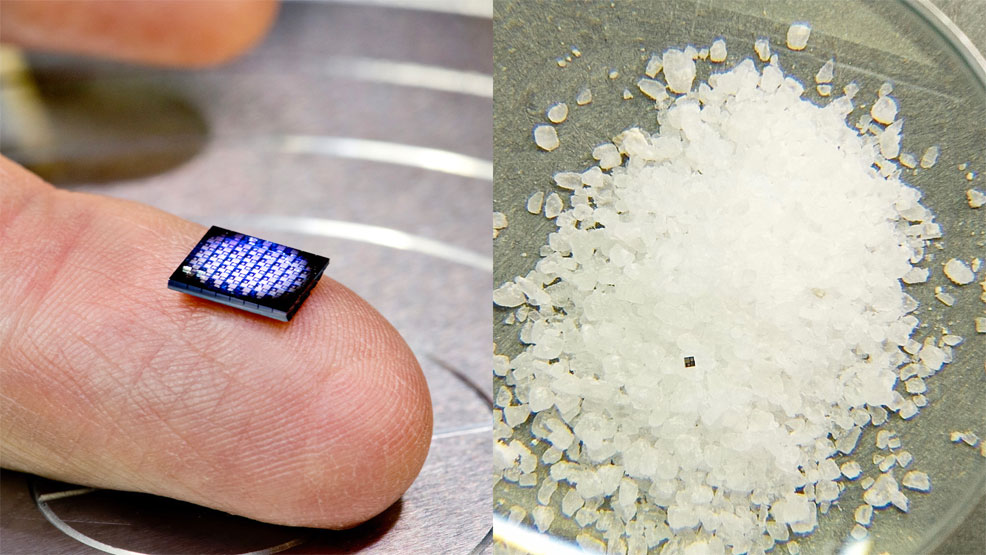

Gadi, located at the National Computational Infrastructure at the Australian National University in Canberra, is the main machine used in climate computing in Australia. It contains a vast number of computer chips known as central processing units (CPUs) and graphical processing units (GPUs). It has more than 250,000 CPUs and 640 GPUs. It is the CPUs that have made Gadi the Australian climate computer of choice.

Compare this with my humble MacBook Pro M3, which effectively sports 11 CPUs and 12 GPUs, and you understand why Gadi is called a supercomputer.

There has always been a strong connection between supercomputing and climate modelling, with climate models steadily improving as scientists access bigger and better supercomputers.

The secret lies in being able to divide Earth into finer and finer pieces and adding more of the important processes that affect our weather and climate. Both enhance the reliability of the model results.

While most climate models divide Earth into a grid of squares roughly 100km in size, the most advanced global climate models today simulate the behaviour of Earth’s atmosphere, ocean, land and ice using a grid of only a few kilometres. It’s like going from a grainy black and white television to an ultra high-definition one.
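To get a feel for why finer grids demand so much more computing power, here is a rough back-of-the-envelope sketch. It assumes Earth's surface area is about 510 million square kilometres and takes 2.5km as an illustrative value for "a few kilometres"; both numbers, and the function name, are assumptions for illustration rather than figures from any particular model.

```python
# Rough estimate of how many grid squares are needed to tile Earth's surface.
# Assumption: Earth's surface area is approximately 510 million km^2.
EARTH_SURFACE_KM2 = 510e6


def horizontal_cells(spacing_km: float) -> int:
    """Approximate number of grid squares of the given size covering Earth."""
    return round(EARTH_SURFACE_KM2 / spacing_km**2)


coarse = horizontal_cells(100.0)  # typical grid of roughly 100km squares
fine = horizontal_cells(2.5)      # illustrative "few kilometres" grid (assumed)
ratio = fine / coarse             # how many times more squares the fine grid has

print(f"100km grid: {coarse:,} squares")
print(f"2.5km grid: {fine:,} squares")
print(f"The fine grid has {ratio:,.0f} times as many squares")
```

This counts only the squares on the surface. Real models also stack many vertical layers and generally need shorter time steps on finer grids, so the true increase in computing cost is likely larger than this simple ratio suggests.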

Doing so requires the most advanced supercomputers. These include LUMI in Europe and the Frontier machine in the United States.

These big machines aren’t just tools for climate scientists. They also underpin the operational delivery of climate information to all sectors of society, safeguarding property and lives in the process.

A kilometre-scale climate modelling system for societal applications has just been developed in the European Union. Known as the “Climate Change Adaptation Digital Twin”, it represents a major leap forward in our understanding of how climate change will impact Earth – and our ability to respond to it.

How does Australia stack up globally?

So how does Australia stack up in the quest to have a supercomputer that can produce the best climate information possible to future-proof our nation?

The Gadi supercomputer is currently ranked 179th in the world. It was in 24th position in 2020, when it was introduced.

For comparison, the Frontier supercomputer is ranked 2nd. The LUMI supercomputer is ranked 9th. Topping the list is the El Capitan supercomputer in the US.

In May 2025 the federal government announced A$55 million to renew Gadi.

This is roughly two-thirds of the funding it received for its previous upgrade in 2019, and will only lead to a moderate increase in our climate computing abilities – well behind the rest of the world.

A major disadvantage

This puts Australia at a major disadvantage when it comes to planning for the future.

But why can’t we just use the more advanced models and supercomputers developed elsewhere?

First, apart from our own ACCESS global model, all climate models are built in the Northern Hemisphere. This means they are calibrated to do well there, with limited attention paid to our region.

Second, making good decisions about Australia’s future requires us to be self-sufficient when it comes to simulating the climate system using scenarios defined by us and relevant to our region.

This has been brought into sharp focus by recent cuts to climate science in the US.

In short, good decisions on our future require self-sufficiency in climate modelling. We actually have the software (the ACCESS model itself) to do this, but the current and planned supercomputing and data infrastructure to run it on is simply outdated.

An ambitious solution

Learning lessons from the international community, it is time to think big and integrate the power of existing climate modelling with the emerging abilities of artificial intelligence (AI) and machine learning to build a “digital twin” of Australia.

With weather and climate at its heart, the digital twin could directly integrate other major features of Australia, such as its ecosystems, cities, and energy and transport systems.

The cost of such a facility and the research and operational need to enable it is large. But the cost of poor decisions based on outdated information could be even higher.

Christian Jakob, Director, ARC Centre of Excellence for the Weather of the 21st Century, Monash University

This article is republished from The Conversation under a Creative Commons license. Read the original article.
