A guide: Uranium and the nuclear fuel cycle Yellowcake (Image: Dean Calma/IAEA)

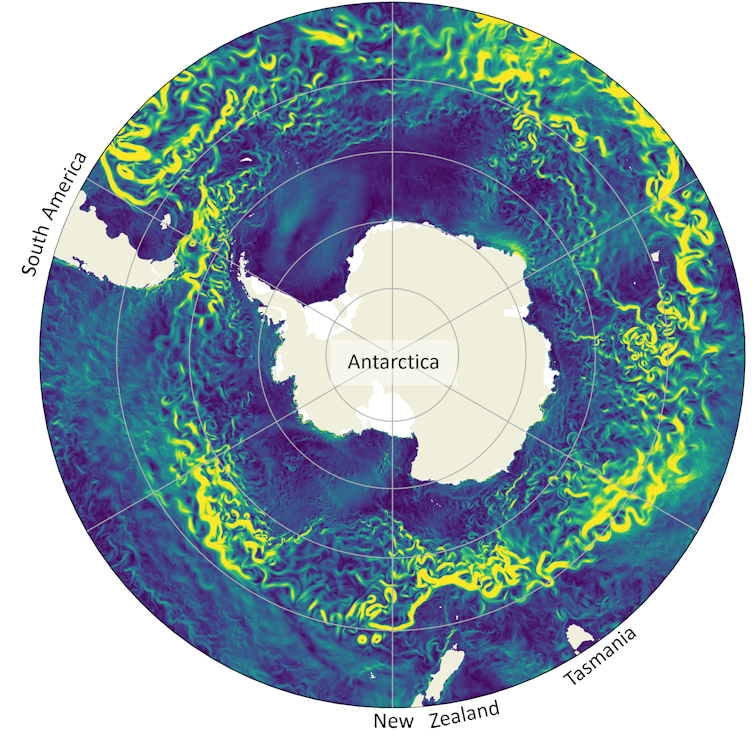

The nuclear fuel cycle is the series of industrial processes that turns uranium into electricity. Claire Maden takes a look at the steps that make up the cycle, the major players and the potential pinch-points.

The nuclear fuel cycle starts with the mining of uranium ore and ends with the disposal of nuclear waste. (Ore is simply the naturally occurring material from which a mineral or minerals of economic value can be extracted.)

We talk about the front end of the fuel cycle - that is, the processes needed to mine the ore, extract uranium from it, refine it, and turn it into a fuel assembly that can be loaded into a nuclear reactor - and the back end of the fuel cycle - what happens to the fuel after it's been used. If the used fuel is treated as waste, and disposed of, this is known as an "open" fuel cycle. It can also be reprocessed to recover uranium and other fissile materials which can be reused in what is known as a "closed" fuel cycle.

The World Nuclear Association's Information Library has a detailed overview of the fuel cycle. But in a nutshell, the front end of the fuel cycle is made up of mining and milling, conversion, enrichment and fuel fabrication. Fuel then typically spends about three years inside a reactor, after which it may go into temporary storage before reprocessing and recycling, and before the waste produced is disposed of - these steps are the back end of the fuel cycle.

The processes that make up the fuel cycle are carried out by companies all over the world. Some companies specialise in one particular area or service; some offer services in several areas of the fuel cycle. Some are state-owned, some are in the private sector. Underpinning all these separate offerings is the transport sector to get the materials to where they need to be - and overarching all of it is the global market for nuclear fuel and fuel cycle services.

(Image: World Nuclear Association)

How do they do it?

Let's start at the very front of the front end: uranium mining.

Depending on the type of mineralisation and the geological setting, uranium can be mined by open pit or underground mining methods, or by dissolving it underground and recovering it via wells. The latter is known as in-situ recovery - ISR - or in-situ leaching, and is now the most widely used method: Kazakhstan produces more uranium than any other country, and all by in-situ methods.

Uranium mined by conventional methods is recovered at a mill where the ore is crushed, ground and then treated with sulphuric acid (or a strong alkaline solution, depending on the circumstances) to dissolve the uranium oxides, a process known as leaching.

Whether the uranium was leached in-situ or in a mill, the next stage of the process is similar for both routes: the uranium is separated by ion exchange.

Ion exchange is a method of removing dissolved uranium ions from a solution using a specially selected resin or polymer. The uranium ions bind reversibly to the resin, while impurities are washed away. The uranium is then stripped from the resin into another solution from which it is precipitated, dried and packed, usually as uranium oxide concentrate (U3O8) powder - often referred to as "yellowcake".

More than a dozen countries produce uranium, although about two thirds of world production comes from mines in three countries - Kazakhstan, Canada and Australia. Namibia, Niger and Uzbekistan are also significant producers.

The next stage in the process is conversion - a chemical process to refine the U3O8 to uranium dioxide (UO2), which can then be converted into uranium hexafluoride (UF6) gas. This is the raw material for the next stage of the cycle: enrichment.
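As a rough illustration of what changes between these chemical forms, the sketch below works out how much of each compound's mass is actually uranium. It uses rounded standard atomic weights and assumes natural uranium; the function name and data layout are illustrative only and are not taken from any industry tool.

```python
# Rounded standard atomic weights in g/mol (natural uranium assumed).
ATOMIC_WEIGHT = {"U": 238.029, "O": 15.999, "F": 18.998}


def uranium_mass_fraction(formula: dict[str, int]) -> float:
    """Return the fraction of a compound's mass that is uranium.

    `formula` maps element symbols to atom counts, e.g. {"U": 3, "O": 8}.
    """
    total = sum(ATOMIC_WEIGHT[element] * count for element, count in formula.items())
    return ATOMIC_WEIGHT["U"] * formula.get("U", 0) / total


# The three compounds named in the conversion step.
COMPOUNDS = {
    "U3O8": {"U": 3, "O": 8},
    "UO2": {"U": 1, "O": 2},
    "UF6": {"U": 1, "F": 6},
}

for name, formula in COMPOUNDS.items():
    print(f"{name}: {uranium_mass_fraction(formula):.1%} uranium by mass")
```

Uranium makes up roughly 85% of the mass of U3O8 but only about 68% of UF6, which is one reason quantities in the fuel cycle are usually quoted with the chemical form stated.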

Unenriched, or natural, uranium contains about 0.7% of the fissile uranium-235 (U-235) isotope. ("Fissile" means it's capable of undergoing the fission process by which energy is produced in a nuclear reactor.) Almost all of the rest is the non-fissile uranium-238 isotope. Most nuclear reactors need fuel containing between 3.5% and 5% U-235. This is known as low-enriched uranium, or LEU. Advanced reactor designs that are now being developed - and many small modular reactors - will require higher enrichments still. This material, containing between 5% and 20% U-235, is known as high-assay low-enriched uranium, or HALEU. And some reactors - for example the Canadian-designed Candu - use natural uranium as their fuel and don't require enrichment services. But more of that later.
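The enrichment bands described above can be summarised in a small lookup. This is only a sketch: the 5% and 20% boundaries come from the text, while the 0.72% cut-off for "natural" uranium and the function and label names are assumptions made for illustration, not regulatory definitions.

```python
# Band limits in percent U-235. The 5% and 20% figures follow the article;
# NATURAL_MAX is an assumed cut-off just above the ~0.7% natural level.
NATURAL_MAX = 0.72
LEU_MAX = 5.0
HALEU_MAX = 20.0


def classify_assay(u235_percent: float) -> str:
    """Label uranium by its U-235 content, using the bands in the article."""
    if not 0.0 <= u235_percent <= 100.0:
        raise ValueError("U-235 assay must be a percentage between 0 and 100")
    if u235_percent <= NATURAL_MAX:
        return "natural (or depleted) uranium"
    if u235_percent <= LEU_MAX:
        return "LEU"
    if u235_percent < HALEU_MAX:
        return "HALEU"
    return "above the HALEU range"


for assay in (0.7, 4.5, 19.75, 93.0):
    print(f"{assay}% U-235: {classify_assay(assay)}")
```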

Enrichment increases the concentration of the fissile isotope by passing the gaseous UF6 through gas centrifuges, in which a fast-spinning rotor inside a vacuum casing makes use of the very slight difference in mass between the fissile and non-fissile isotopes to separate them. As the rotor spins, the concentration of molecules containing the heavier, non-fissile isotope increases near the outer wall of the cylinder, with a corresponding increase in the concentration of molecules containing the lighter U-235 isotope towards the centre. World Nuclear Association's information paper on uranium enrichment contains more details about the enrichment process and technology.
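To give a feel for the quantities involved, here is a minimal sketch of the standard mass balance for an enrichment plant, together with the separative work (SWU) formula commonly used to measure enrichment effort. The 0.25% tails assay, the 0.7% feed assay and all function names are assumptions chosen for illustration; masses are kilograms of uranium, not of UF6.

```python
import math


def value_function(assay: float) -> float:
    """Separation potential V(x) = (1 - 2x) * ln((1 - x) / x), x as a fraction."""
    return (1.0 - 2.0 * assay) * math.log((1.0 - assay) / assay)


def enrichment_requirements(
    product_kg: float,
    product_assay: float,
    feed_assay: float = 0.007,    # about 0.7% U-235, natural uranium
    tails_assay: float = 0.0025,  # assumed depleted "tails" assay
) -> tuple[float, float, float]:
    """Return (feed_kg, tails_kg, swu) needed for the requested product."""
    if not 0.0 < tails_assay < feed_assay < product_assay < 1.0:
        raise ValueError("expected 0 < tails < feed < product < 1")
    # Conservation of total uranium and of U-235 fixes the feed requirement.
    feed_kg = product_kg * (product_assay - tails_assay) / (feed_assay - tails_assay)
    tails_kg = feed_kg - product_kg
    swu = (
        product_kg * value_function(product_assay)
        + tails_kg * value_function(tails_assay)
        - feed_kg * value_function(feed_assay)
    )
    return feed_kg, tails_kg, swu


feed, tails, swu = enrichment_requirements(product_kg=1.0, product_assay=0.045)
print(f"feed: {feed:.2f} kg U, tails: {tails:.2f} kg U, separative work: {swu:.2f} SWU")
```

Under these assumptions, each kilogram of 4.5% enriched uranium needs a little over nine kilograms of natural uranium feed. Choosing a lower tails assay reduces the feed required but increases the separative work.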

Enriched uranium is then reconverted from the fluoride to the oxide - a powder - for fabrication into nuclear fuel assemblies.

So that's the front end of the fuel cycle. Then, there is the back end: the management of the used fuel after its removal from a nuclear reactor. This might be reprocessed to recover fissile and fertile materials in order to provide fresh fuel for existing and future nuclear power plants.

In-situ recovery (in-situ leach) operations in Kazakhstan (Image: Kazatomprom)

Who, where and when

That's a pared-down look at the processes that make up the front end of the fuel cycle - the "how" of getting uranium from the ground and into the reactor. But how does that work on a global scale when much of the world's uranium is produced in countries that do not (yet) use nuclear power? And that brings us to: the market.

The players in the nuclear fuel market are the producers and suppliers (the uranium miners, converters, enrichers and fuel fabricators), the consumers of nuclear fuel (nuclear utilities, both public and privately owned), and various other participants such as agents, traders, investors, intermediaries and governments.

As well as the uranium, there is also the market for the services needed to turn it into fuel assemblies ready for loading into a power plant. And the nuclear fuel cycle's international dimension means that uranium mined in Australia, for example, may be converted in Canada, enriched in the UK and fabricated in Sweden, for a reactor in South Africa. In practice, nuclear materials are often exchanged - swapped - to avoid the need to transport materials from place to place as they go through the various processing stages in the nuclear fuel cycle.

Uranium is traded in two ways: the spot market, for which prices are reported daily, and mid- to long-term contracts, sometimes referred to as the term market. Utilities buy some uranium on the spot market - but so do players from the financial community. In recent years, such investors have been buying physical stocks of uranium for investment purposes.

Most uranium is traded under long-term contracts of 3 to 15 years, with producers selling directly to utilities at a higher price than the spot market - although prices specified in term contracts tend to be tied to the spot price at the time of delivery. And like all mineral commodity markets, the uranium market tends to be cyclical, with prices that rise and fall depending on demand and perceptions of scarcity.

The spot market in uranium is a physical market, with traders, brokers, producers and utilities acting bilaterally. Unlike many other commodities such as gold or oil, there is no formal exchange for uranium. Uranium price indicators are developed and published by a small number of private business organisations, notably UxC, LLC and Tradetech, both of which have long-running price series.

Likewise, conversion and enrichment services are bought and sold on both spot and term contracts, but fuel fabrication services are not procured in quite the same way. Fuel assemblies are specifically designed for particular types of reactors and are made to exacting standards and regulatory requirements. In the words of World Nuclear Association's flagship fuel cycle report, nuclear fuel is not a fungible commodity, but a high-tech product accompanied by specialist support.

Drums of uranium from Cameco's Key Lake mill are transported to the company's facilities at Blind River, Ontario, for further processing (Image: Cameco)

Bottlenecks and challenges

Uranium is mined and milled at many sites around the world, but the subsequent stages of the fuel cycle are carried out in a limited number of specialised facilities.

Anyone unfamiliar with the sector might wonder why all the different stages of mining, conversion, enrichment and fabrication are not done at the same location. Simply put, conversion and enrichment services tend to be centralised because of the specialised nature and the sheer scale of the plants, and also because of the international regime to prevent the risk of nuclear weapons proliferation.

Commercial conversion plants are found in Canada, China, France, Russia and the USA.

Uranium enrichment is strategically sensitive from a non-proliferation standpoint so there are strict international controls to ensure that civilian enrichment plants are not used to produce uranium of much higher enrichment levels (90% U-235 and above) that could be used in nuclear weapons. Enrichment is also very capital intensive. For these reasons, there are relatively few commercial enrichment suppliers operating a limited number of facilities worldwide.

There are three major enrichment producers at present: Orano, Rosatom and Urenco, which operate large commercial enrichment plants in France, Germany, the Netherlands, the UK, the USA and Russia. CNNC is a major domestic supplier in China.

So limited capacity, particularly in conversion and enrichment, can potentially lead to bottlenecks and challenges in the nuclear fuel supply chain. Likewise, interruptions to transport routes and geopolitical issues can also affect the supply of nuclear materials. For example, current US enrichment capacity is not sufficient to fulfil all the requirements of its domestic nuclear power plants, and the USA relies on overseas enrichment services. But in 2024, US legislation was enacted banning the import of Russian-produced LEU until the end of 2040, with Russia placing tit-for-tat restrictions on exports of the material to the USA.

The fabrication of that LEU into reactor fuel is the last step in the process of turning uranium into nuclear fuel rods. Fuel rods are batched into assemblies that are specifically designed for particular types of reactors and are made to exacting standards by specialist companies. Most of the main fuel fabricators are also reactor vendors (or owned by them), and they usually supply the initial cores and early reloads for reactors built to their own designs. The World Nuclear Association information paper on Nuclear Fuel and its Fabrication gives a deeper dive into this sector.

So that's an introduction to the nuclear fuel cycle - and we have only touched on the so-called back end, which is what happens to that fuel after it has spent around three years in the reactor core generating electricity, and the ways in which used fuel could be recycled to continue providing energy for years to come.
