Hi Grace!

Thanks for this great question. It’s an age-old dilemma that has left many people scratching their heads.

From an evolutionary perspective, both answers could be considered true! It all depends on how you interpret the question.

The case for the egg

When the first vertebrates – that is, the first animals with backbones – came out of the sea to live on land, they faced a challenge.

Their eggs, similar to those of modern fish, were covered only in a thin layer called a membrane. The eggs would quickly dry up and die when exposed to air. Some animals such as amphibians (the group that includes frogs and axolotls) solved this problem by simply laying their eggs in water – but this limited how far inland they could travel.

It was the early reptiles that evolved a key solution to this problem: an egg with a protective outer shell. The first egg shells would have been soft and leathery like the eggs of a snake or a sea turtle. Hard-shelled eggs, such as those of birds, likely appeared much later.

Some of the oldest known hard-shelled eggs appear in the fossil record during the Early Jurassic period, roughly 195 million years ago. Dinosaurs laid these eggs, although reptiles such as crocodiles were also producing hard-shelled eggs during the Jurassic.

As we know now, it was a line of dinosaurs that eventually gave rise to the many species of birds we see today, including the chicken.

Chickens belong to an order of birds known as the Galliformes, which includes other ground-dwelling birds such as turkeys, pheasants, peafowl and quails.

Specifically, chickens are part of a galliform genus called Gallus, which is thought to have started diverging into its modern species between 6 million and 4 million years ago in South-East Asia. Domestic chickens only began appearing some time within the past 10,000 years.

This means hard-shelled eggs like the ones chickens lay are older than chickens themselves by almost 200 million years. So problem solved, right?

Well, it’s a matter of perspective.

The case for the chicken

If we interpret the question as referring specifically to chicken eggs – and not all eggs – the answer is very different.

Unlike most species of animals, the modern chicken didn’t arise through natural selection alone. Rather, it’s the result of domestication: a process where humans selectively breed animals to create individuals that are tamer and have more desirable traits.

The most famous example is the domestication of wolves into dogs by humans. Wolves and dogs have almost entirely the same DNA, but are very different in how they look and behave. Dogs came from wolves, and so scientists consider dogs to be a subspecies of wolf.

Similarly, chickens came from a species called the red junglefowl, which is found across Southern and South-East Asia. Researchers think red junglefowl were first drawn to humans thousands of years ago, when people started farming rice and other cereal grains.

This closeness then allowed domestication to take place. Over many generations the descendants of these tamed birds became their own subspecies.

Technically, the first chicken would have hatched from the egg of a selectively bred junglefowl. It was only when this chicken matured and started reproducing that the first true chicken eggs were laid.

So which answer is the better one?

That’s completely up to you to decide. As is the case with many dilemmas, the whole point of the question is to make you think – not necessarily to come up with the perfect answer.

In this case, evolutionary biology allows us to make an argument for both sides – and that is one of the wonderful things about science.

Ellen K. Mather, Adjunct Associate Lecturer in Palaeontology, Flinders University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Shutterstock/Edited by The Conversation



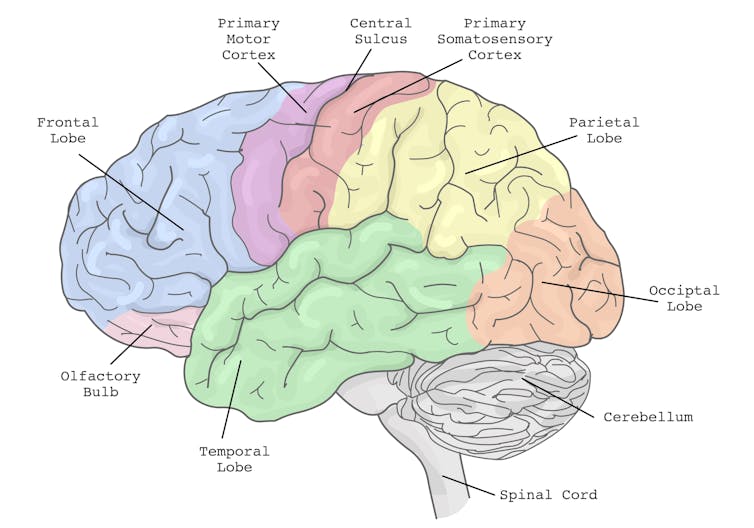

The brain’s action planning centres are in the frontal cortex (blue), with reciprocal connections to parietal cortex (yellow) and the cerebellum (grey), among others.

The brain’s action planning centres are in the frontal cortex (blue), with reciprocal connections to parietal cortex (yellow) and the cerebellum (grey), among others.  Having a conversation while driving slows your reaction time.

Having a conversation while driving slows your reaction time.  Our ability to multi-task reduces with age.

Our ability to multi-task reduces with age.